Oral Paper Presentation

Annual Scientific Meeting

Session: Plenary Session 2A - Functional / Esophagus

20 - ChatGPT-4.0 Answers Common Irritable Bowel Syndrome Patient Queries: Accuracy and References Validity

Tuesday, October 24, 2023

8:40 AM - 8:50 AM PT

Location: Ballroom A

Joseph El Dahdah, MD (he/him/his)

Cleveland Clinic

Cleveland, OH

Presenting Author(s)

Joseph El Dahdah, MD1, Anthony Lembo, MD2, Brian Baggott, MD3, Joseph Kassab, MD3, Anthony Kerbage, MD1, Carol Rouphael, MD1

1Cleveland Clinic, Cleveland, OH; 2Digestive Disease and Surgery Institute, Cleveland Clinic, Cleveland, OH; 3Cleveland Clinic Foundation, Cleveland, OH

Introduction: Artificial intelligence (AI) chatbots are becoming increasingly popular and are likely to be used frequently by patients with health-related concerns. ChatGPT was introduced in November 2022 and was recently updated to version 4. We sought to assess the accuracy of the answers and references provided by ChatGPT-4.0 in response to questions on irritable bowel syndrome (IBS), a diagnosis frequently queried online.

Methods: After reviewing the most frequently searched terms related to IBS on Google Trends, we formulated 15 questions on the topic. We entered each question into ChatGPT-4.0 in a separate chat log and asked the model to supply references for each generated answer. Three independent gastroenterologists then assessed the accuracy of the answers and the references provided. Answers were evaluated using two grading systems: an overall grade (accurate vs. inaccurate) and a granular grade (100% accurate, 100% inaccurate, accurate with missing information, partly inaccurate). References were graded as suitable, unsuitable (existent but unrelated to the answer), or nonexistent. We used free-marginal Fleiss kappa coefficients (κ) to quantify inter-rater agreement before (κpre) and after (κpost) consensus discussions, which served to resolve grading discrepancies. When disagreement persisted, the most stringent evaluation was accepted as the definitive grade.
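The agreement statistic named above can be illustrated with a minimal sketch. It assumes the free-marginal multirater kappa in its usual form, where observed agreement is the share of agreeing rater pairs per item and chance agreement is fixed at 1/k for k categories. The function name `free_marginal_kappa` and the example ratings are hypothetical; they are not the study's code or data, and the abstract does not say which software the authors used.

```python
from collections import Counter


def free_marginal_kappa(ratings, num_categories):
    """Free-marginal multirater kappa.

    ratings: list of items, each a list holding one label per rater.
    num_categories: number of labels raters could choose from (k).
    """
    if not ratings:
        raise ValueError("at least one rated item is required")
    n_items = len(ratings)
    n_raters = len(ratings[0])
    if n_raters < 2 or num_categories < 2:
        raise ValueError("need at least two raters and two categories")

    agreeing_pairs = 0
    for item in ratings:
        if len(item) != n_raters:
            raise ValueError("every item needs the same number of ratings")
        # c * (c - 1) counts ordered rater pairs that chose the same label.
        agreeing_pairs += sum(c * (c - 1) for c in Counter(item).values())

    p_observed = agreeing_pairs / (n_items * n_raters * (n_raters - 1))
    p_expected = 1 / num_categories  # chance agreement with free marginals
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical ratings for four questions by three raters (NOT study data).
example = [
    ["accurate", "accurate", "accurate"],
    ["accurate", "accurate", "inaccurate"],
    ["inaccurate", "inaccurate", "inaccurate"],
    ["accurate", "accurate", "accurate"],
]
print(round(free_marginal_kappa(example, num_categories=2), 3))
```

Because chance agreement depends only on the number of categories, the same raw agreement yields a different κ under the two-category overall grade than under the four-category granular grade.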

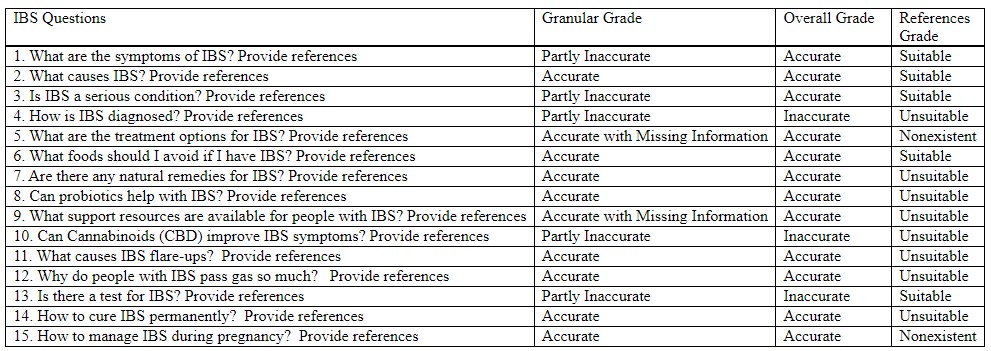

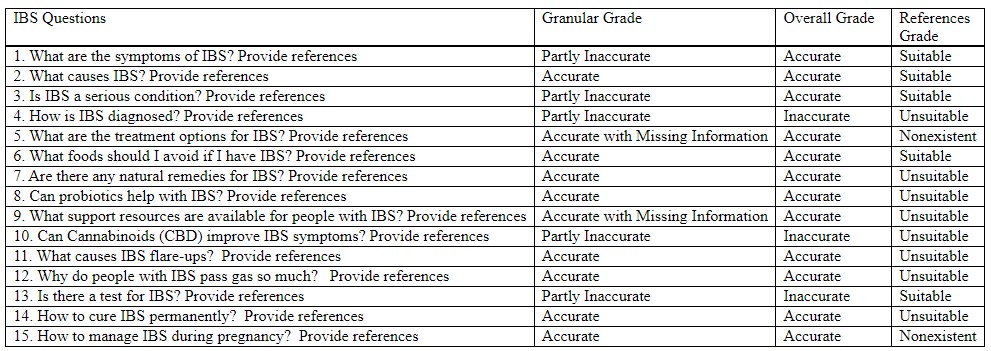

Results: On overall grading, 80% of ChatGPT-4.0 answers were accurate and 20% were inaccurate (κpre=0.82 [95% confidence interval (CI), 0.58-1.00]; κpost=1.00 [95% CI, 1.00-1.00]). On granular grading, 53% of answers were 100% accurate, 33% were partly inaccurate, 13% were accurate with missing information, and none (0%) were 100% inaccurate (κpre=0.38 [95% CI, 0.14-0.62]; κpost=0.88 [95% CI, 0.72-1.00]). References were suitable for 33% of answers, unsuitable for 53%, and nonexistent for 13% (κpre=0.53 [95% CI, 0.27-0.79]; κpost=1.00 [95% CI, 1.00-1.00]).
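The percentages above are whole-number roundings over 15 questions, which is why the granular grades sum to 99% rather than 100%. The short sketch below back-calculates the question counts each percentage implies. These counts are inferred from the reported percentages and the stated total of 15; the abstract does not report them directly, and `implied_count` is a hypothetical helper.

```python
TOTAL_QUESTIONS = 15  # stated in the Methods


def implied_count(percent, total=TOTAL_QUESTIONS):
    """Nearest whole number of questions matching a rounded percentage."""
    return round(percent / 100 * total)


# Reported percentages, grouped by grading system.
reported = {
    "overall": {"accurate": 80, "inaccurate": 20},
    "granular": {
        "100% accurate": 53,
        "partly inaccurate": 33,
        "accurate with missing information": 13,
        "100% inaccurate": 0,
    },
    "references": {"suitable": 33, "unsuitable": 53, "nonexistent": 13},
}

for system, grades in reported.items():
    counts = {grade: implied_count(pct) for grade, pct in grades.items()}
    # Inferred counts should account for all 15 questions in each system.
    assert sum(counts.values()) == TOTAL_QUESTIONS
    print(system, counts)
```

Under this reading, 12 of 15 answers were accurate overall, and references were suitable for 5 of 15 answers.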

Discussion: Although overall accuracy was high at 80%, ChatGPT-4.0 still missed some details or provided outdated information. No answer was completely inaccurate, however, which makes the model a potentially safe source of general guidance on common IBS queries. It remains problematic for medical professionals who use it for literature research and referencing, because references were suitable for only 33% of answers. These findings underscore the need to improve the precision of AI chatbots and the validity of their references in the dissemination of health-related information.

Joseph El Dahdah, MD1, Anthony Lembo, MD2, Brian Baggott, MD3, Joseph Kassab, MD3, Anthony Kerbage, MD1, Carol Rouphael, MD1, 20, ChatGPT-4.0 Answers Common Irritable Bowel Syndrome Patient Queries: Accuracy and References Validity, ACG 2023 Annual Scientific Meeting Abstracts. Vancouver, BC, Canada: American College of Gastroenterology.

Figure: Fig.1. Visual Comparison of Reviewers' Assessments of ChatGPT-4.0 Responses to IBS Queries: A Clustered Column Chart

Table: Table 1. Questions posed to ChatGPT-4.0 and Reviewers' Evaluation of Responses

Disclosures:

Joseph El Dahdah indicated no relevant financial relationships.

Anthony Lembo: AEON Biopharma Inc. – Consultant. Alkermes – Consultant. Allakos – Consultant. Allurion – Stock Options. Anji Pharmaceuticals – Consultant. Arena Pharmaceuticals – Consultant. BioAmerica – Consultant. Bristol Myers Squibb – Stock Options. Gemelli Biotech – Consultant. Ironwood Pharmaceuticals – Consultant. Johnson & Johnson – Stock Options. Maunea Kea – Consultant. Neurogastrx, Inc. – Consultant. OrphoMed, Inc. – Consultant. Pfizer – Consultant. QOL Medical – Consultant. Shire, a Takeda company – Consultant. Takeda Pharmaceuticals – Consultant. Vibrant Pharma, Inc. – Consultant.

Brian Baggott indicated no relevant financial relationships.

Joseph Kassab indicated no relevant financial relationships.

Anthony Kerbage indicated no relevant financial relationships.

Carol Rouphael indicated no relevant financial relationships.
